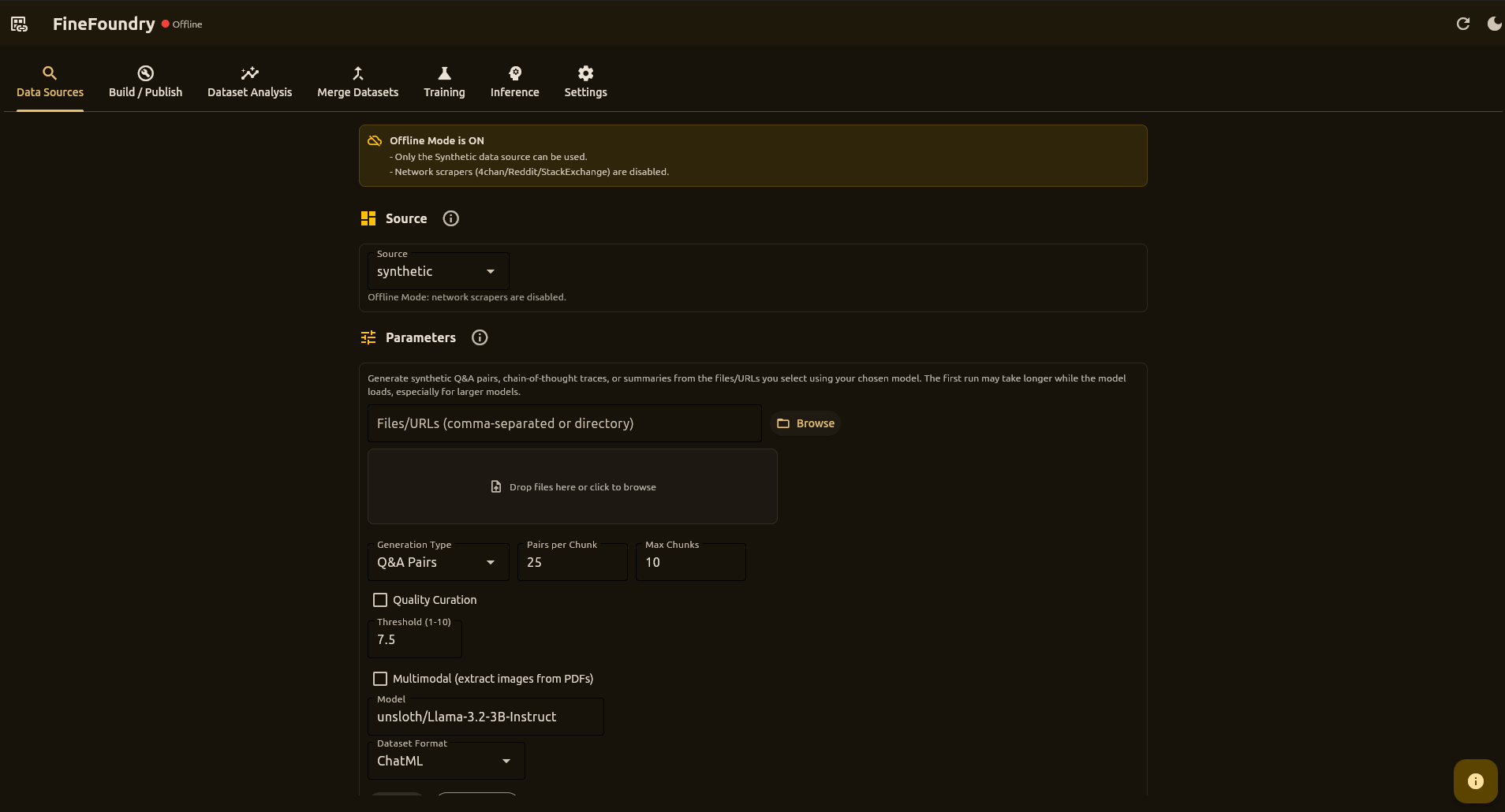

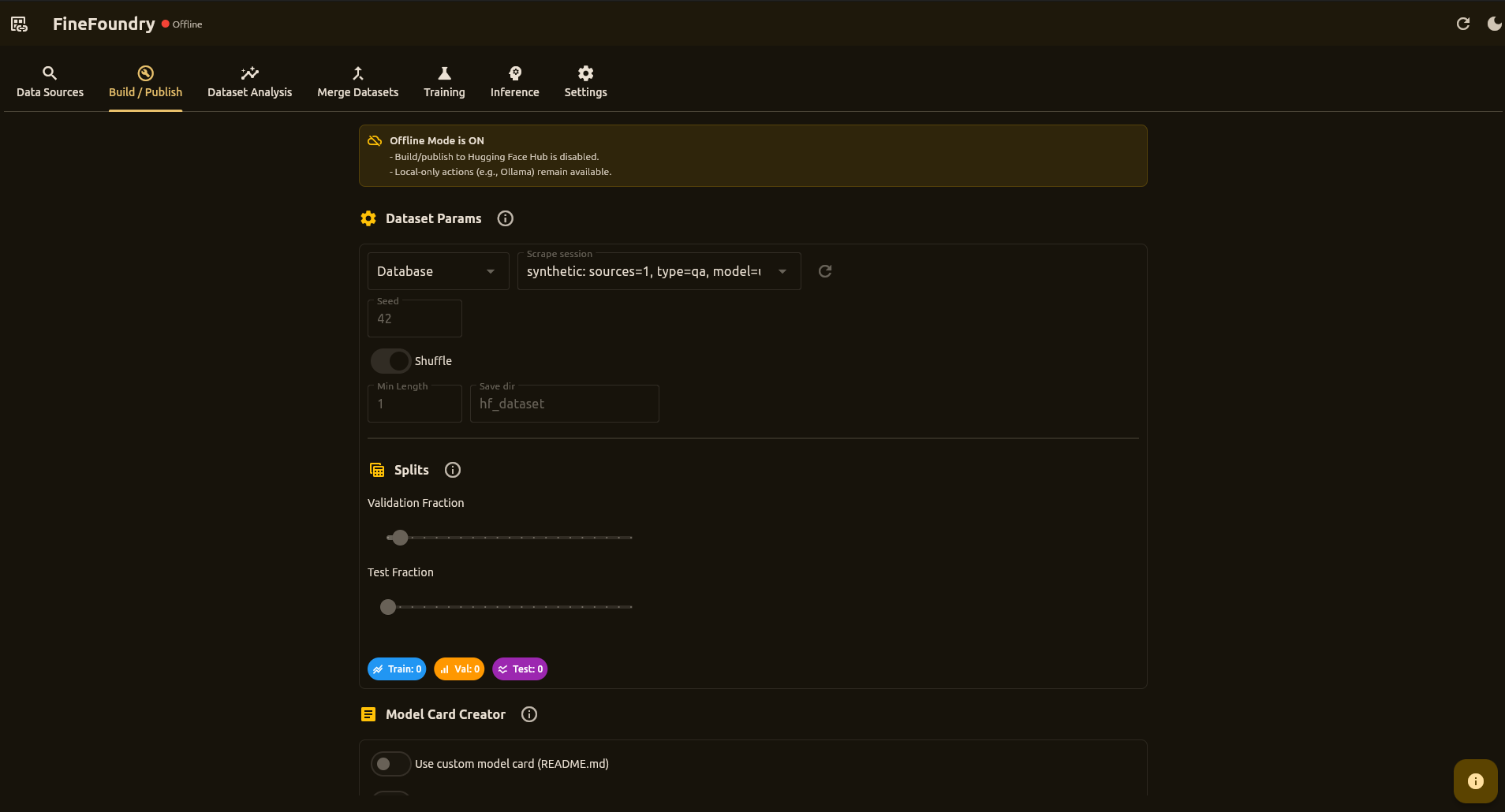

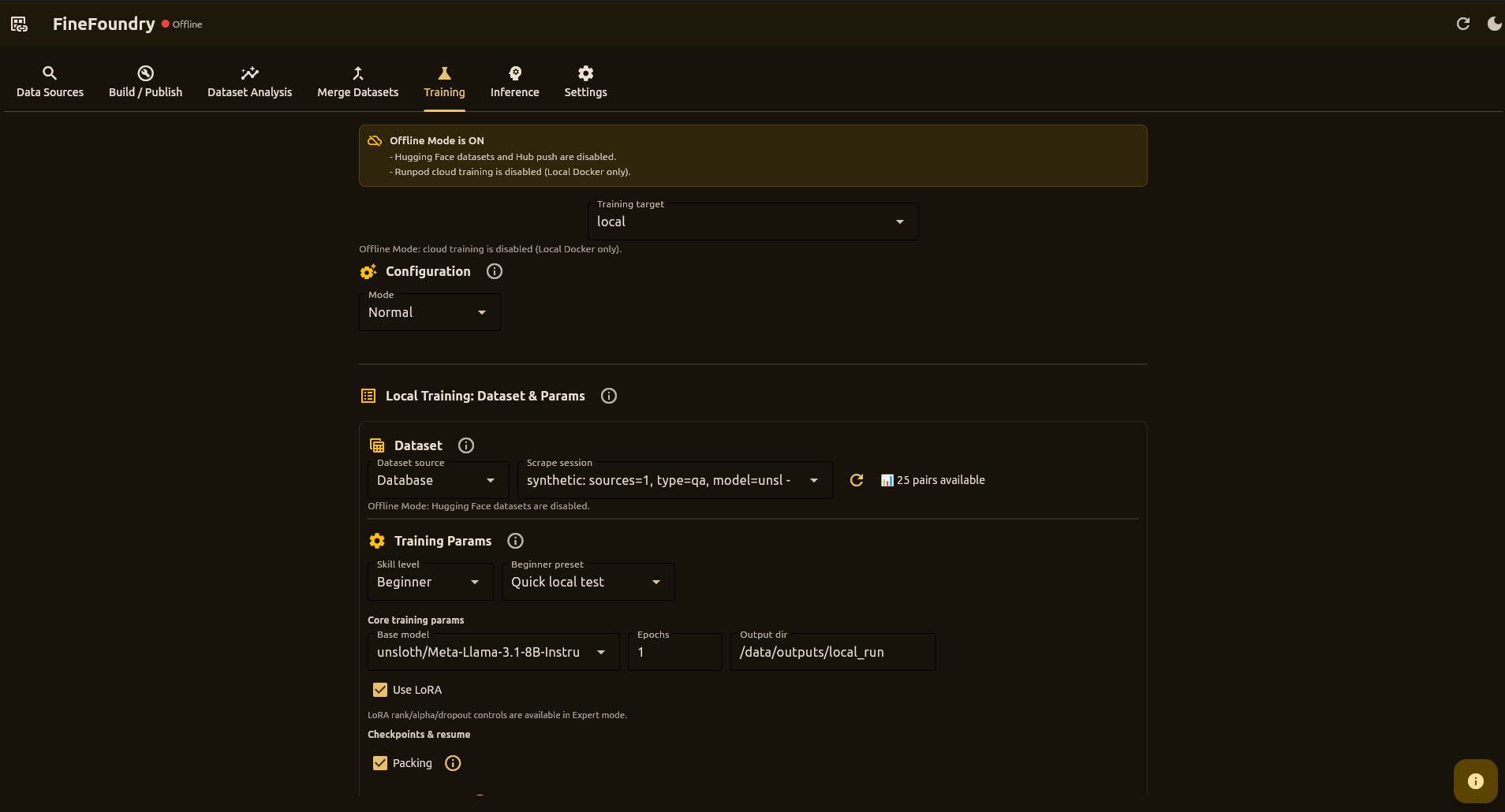

Create Your Own AI Models

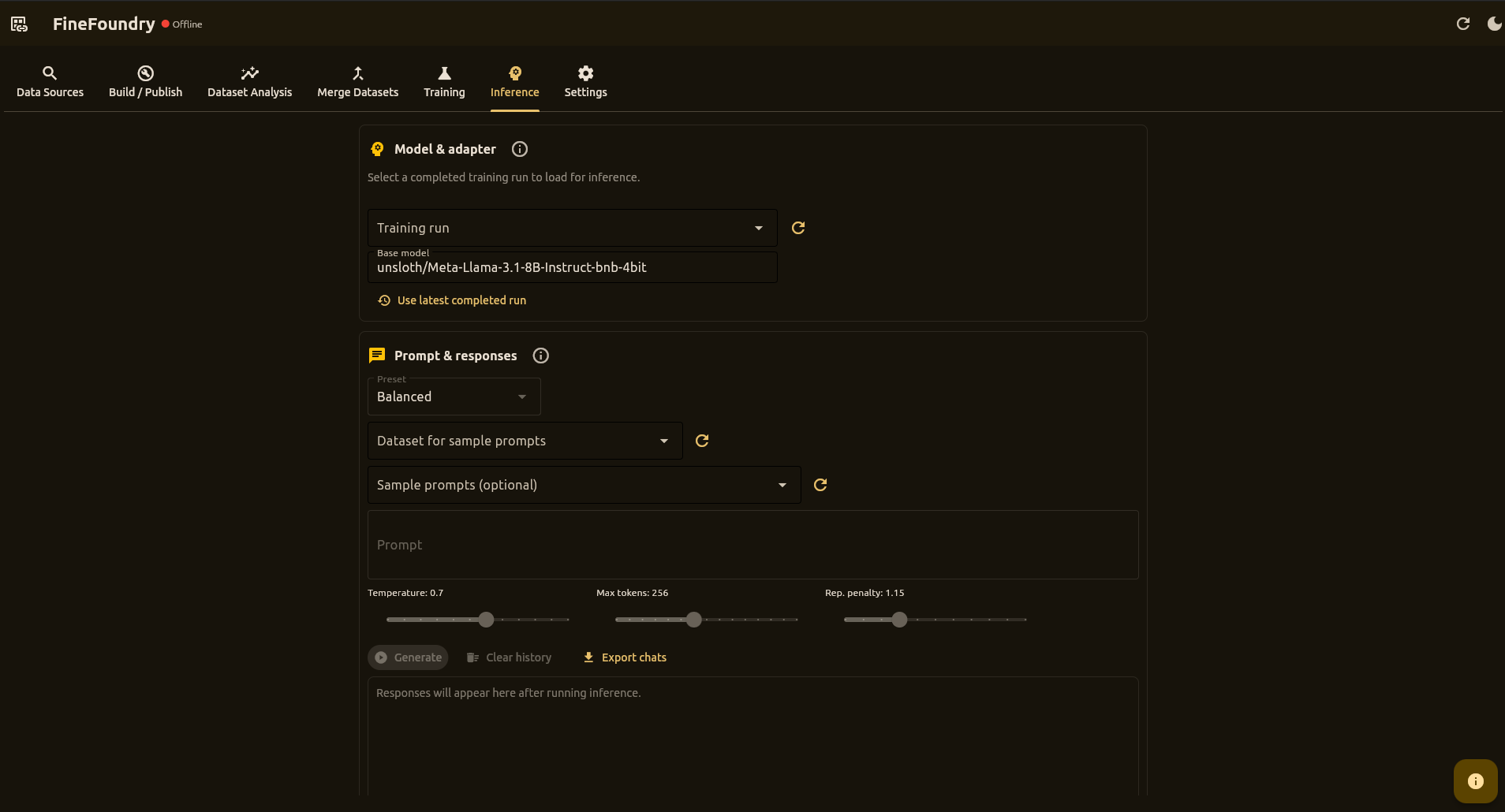

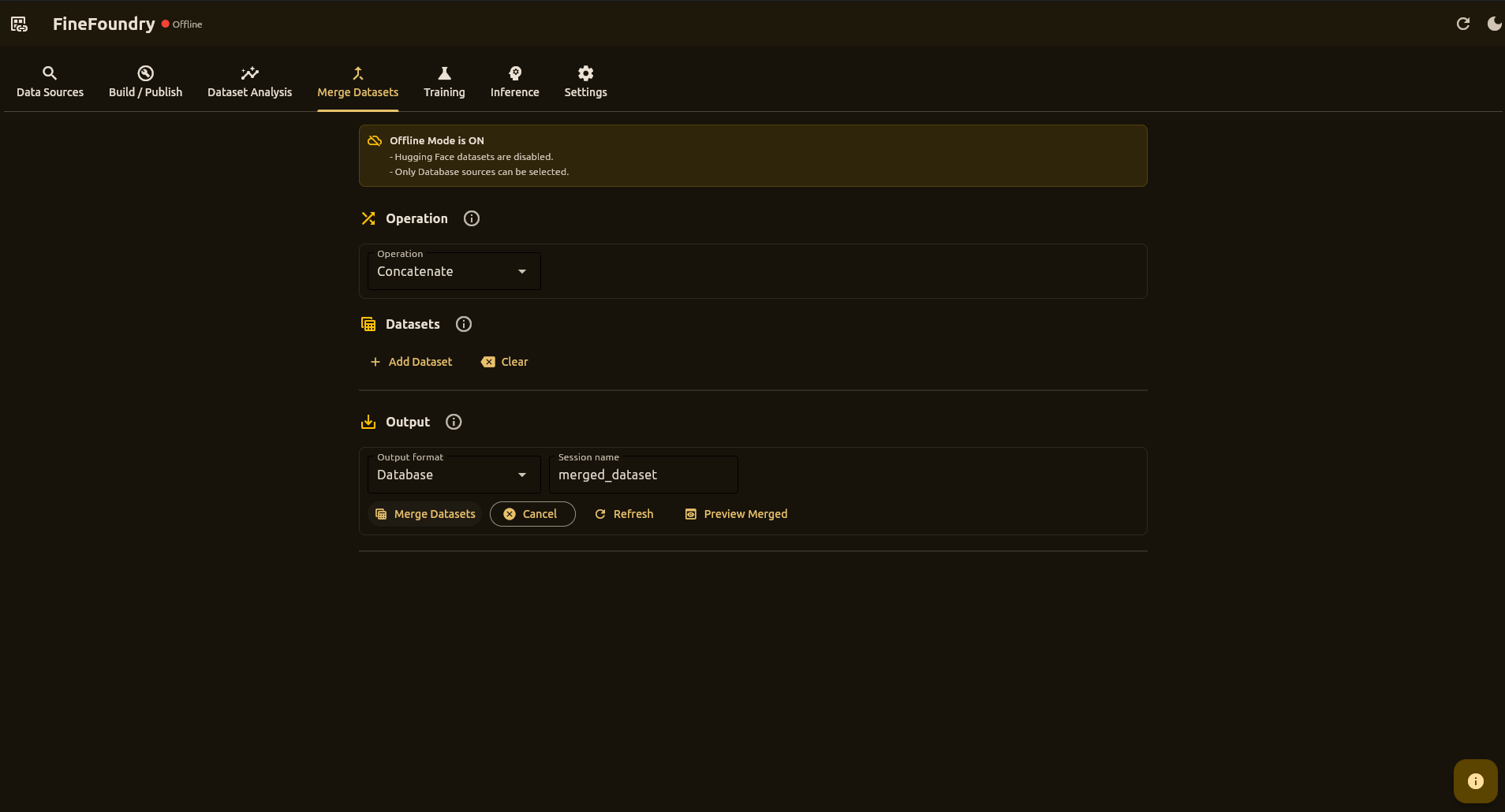

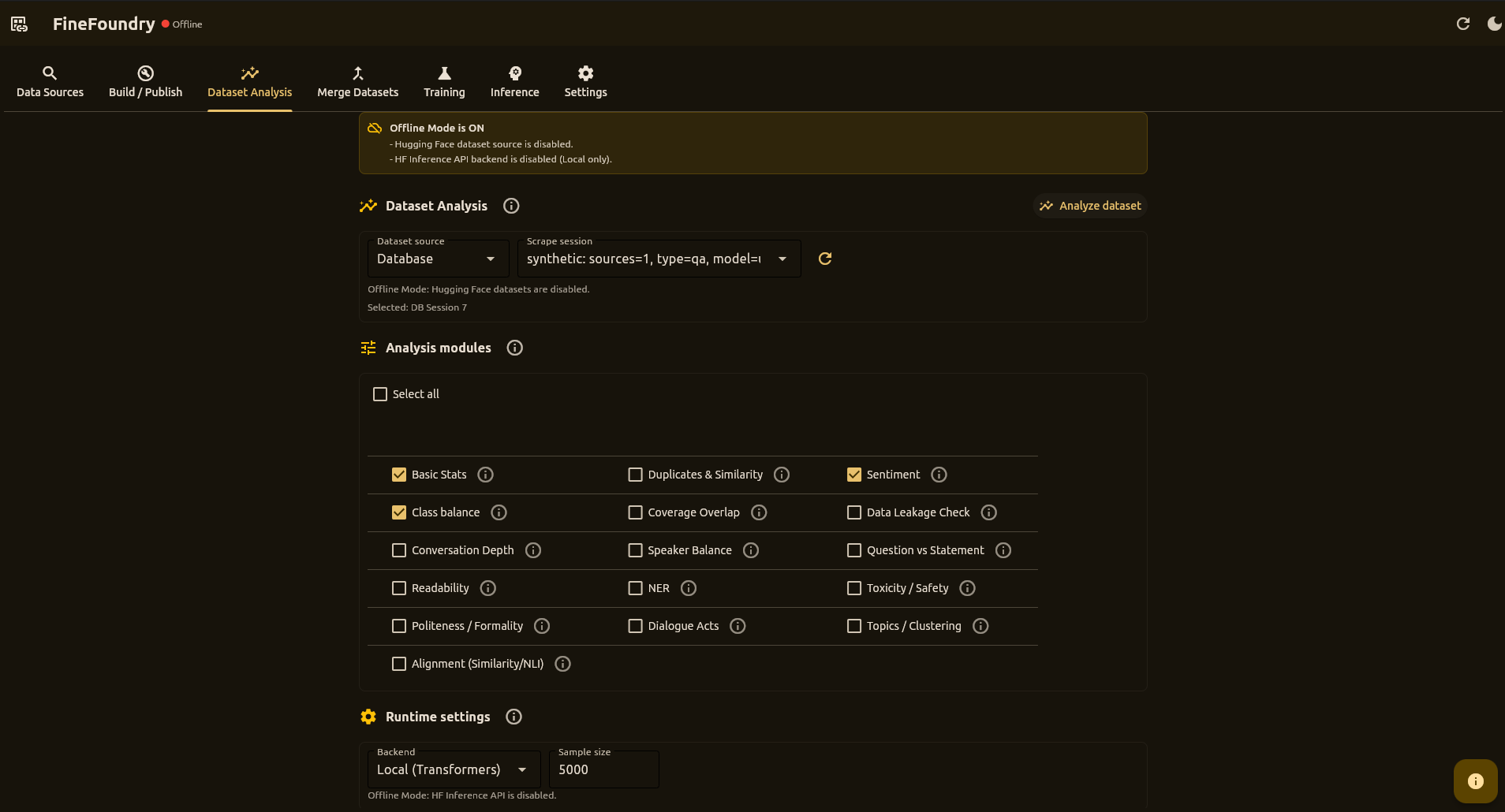

Build custom AI chatbots and assistants without writing code. Collect training data from the web or your own documents, train AI models on your own computer or in the cloud, and test the results by chatting with your creation. Everything happens in one easy-to-use desktop app.